You’ve probably been tricked by fake news and don’t know it

If you spent Thanksgiving trying in vain to convince relatives that the Pope didn’t really endorse Donald Trump or that Hillary Clinton didn’t sell weapons to ISIS, fake news has already weaseled its way into your brain.

Those “stories” and other falsified news outperformed much of the real news on Facebook before the 2016 U.S. presidential election. And on Twitter, an analysis by University of Southern California computer scientists found that nearly 20 percent of election-related tweets came from bots, computer programs posing as real people and often spouting biased or fake news.

If you care about science, that’s a big problem. As daily news moves past the election, the fake news machine isn’t likely to shut down; it will just look for new kinds of attention-grabbing headlines. Fake news about climate change, vaccines and other hot-button science topics has already proven to get clicks.

And if you think only people on the opposite side of the political fence from you will fall for lies, think again. We all do it. Plenty of research shows that people are more likely to believe news if it confirms their preexisting political views, says cognitive scientist David Rapp of Northwestern University in Evanston, Ill. More surprising, though, are the findings from Rapp’s latest studies, along with others on learning and memory. They show that when we read inaccurate information, we often remember it later as being true, even if we initially knew it was wrong. That misinformation can then bias us or affect our decisions.

So just reading fake news can taint you with misinformation. In several experiments, Rapp’s team asked people to read short anecdotes or statements that contained either correct information or untruths. One example of untruthiness: The capital of Russia is St. Petersburg. (It’s Moscow.) Then the researchers surprised these people with a trivia quiz, including some questions about the “facts.” It turns out that people who read the untruths consistently gave more incorrect answers than those who read true or unrelated information, even if they had looked up the correct information previously. Troublingly, these people also then tended to believe that they had already known those incorrect facts before the experiment, showing how easy it is to forget where you “learned” something.

Whether someone can identify the capital of Russia may not seem important, but assertions involving incorrect scientific concepts (such as “brushing your teeth too much can lead to gum disease”) worked the same way in the experiments, and people used the incorrect information to make judgments. So someone who hears over and over again that “trees cause more pollution than automobiles do” might use that incorrect fact to oppose environmental regulations. In fact, I worry that just by my repeating that tired old line, you’ll remember it.

Even more alarming, we also pick up incorrect information from pure fiction, such as novels. Research finds that when we read fictional stories, we don’t just remember facts and plotlines. We use remembered bits of information to make deductions about how the world works, like a sorely misguided Sherlock Holmes.

And again, knowing it’s fiction doesn’t help. This means we now have a public unwittingly armed to assess fake news about GMOs using a genetics lesson gleaned from Jurassic Park.

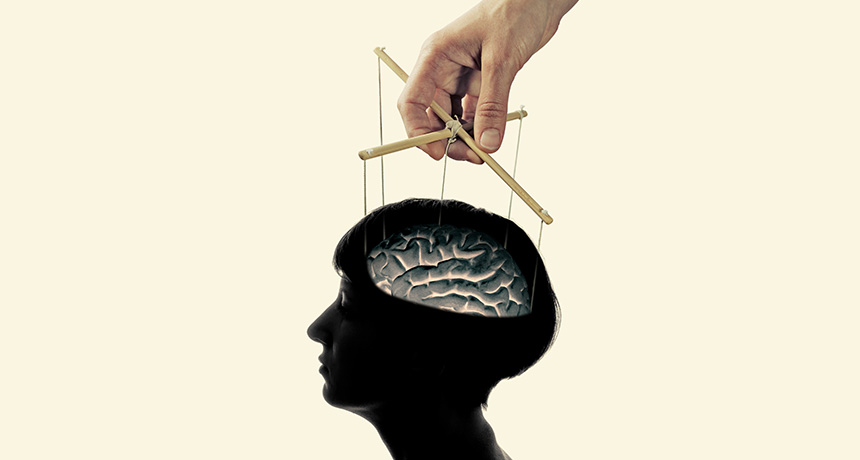

Part of the problem lies in how our brains form memories. For one, the more often a message is repeated (say on Facebook and Twitter), the more likely we are to remember it, an effect called fluent retrieval. That’s fine, but then our brains go one step further. “When we can remember something more easily, we’re more likely to believe it’s true,” says Rapp. This is one of the reasons that the social media echo chamber is so effective. Repeat a lie often enough, and it starts to feel like truth.

“I’ve had cases on my own Facebook feed,” says Rapp, “where people repeat a message they disagree with in an attempt to prove it wrong, and they accidentally amplify it.”

If you think you’ve never shared fake news, I have more bad news. We’re pretty bad at distinguishing fake news from real on social media, says Emilio Ferrara of the University of Southern California in Los Angeles. Ferrara published his findings of widespread Twitter bots in the run-up to the election in First Monday, a computer science journal focused on the internet.

He found that human Twitter users retweeted messages from bots at the same rate as messages from real people, “which means that the average user most likely retweets this content unconditionally,” he says. “It was an unexpected finding, because as an informed reader, I don’t retweet everything I see. But we see systematic lack of a critical ability to distinguish sources.”

Are we doomed, then, to repeat fake news until it becomes real in our minds? Rapp says no, but we’ll need to dust off the critical-thinking skills we learned in grade school.

First, we have to work extra hard while reading not only to remember a claim, but to remember that it’s false. “One idea is that when we encode problematic information as memory, unless we tag it as ‘wrong,’ we might accidentally retrieve that wrong info as real,” Rapp says.

In his experiments, people were better at remembering which facts were false if they fact-checked and corrected information as they read, or at least highlighted incorrect facts.

Explicitly noticing when something might be untrue, and then making an effort to fact-check it, can go a long way, Rapp says. That could be as simple as doing a Google search or checking a site like Snopes.com. Taking mental note of a story’s source and whether it’s reliable helps, too. In general, reinforcing correct knowledge in our memory as we read and compartmentalizing incorrect facts into a “not true” mental category can help keep our brains from becoming a murky fact stew.

In the end, the solution to the fake news problem lies in our own brains. While Facebook and Google try to block fake news with algorithms and starve it of ad dollars, the only way to really curb it is for readers to recognize it, and not share it.